With the proliferation of Cloud reliance, data security and governance issues have expanded to a whole new dimension.

Cloud computing now serves as the foundation for digital transformation in many organizations. Today, instead of asking, “are we moving to the Cloud?”, the burning question is, “how many cloud platforms should we use for our critical infrastructure?”

The answers vary, but large organizations employ an average of 4.8 public and private clouds, according to the RightScale 2018 State of the Cloud Report. However, with the proliferation of Cloud reliance, data security and governance issues have expanded to a whole new dimension.

Just imagine: DevOps teams working with multiple cloud providers are expected to conform to different rules and guidance from platform to platform. While cloud-agnostic engineers will be more commonplace in the future, finding DevOps people who can work with multiple platforms is extremely challenging today.

Furthermore, there is a hidden toll on maintaining both data security AND governance — and this is one area where multi-cloud is still in its infancy. Very few enterprises or SaaS companies have done the hard work to figure out how to make connections completely secure across their clients’ other cloud providers.

Security obsession across the clouds

No security team should ever be satisfied with existing security controls. For a committed and competent security team, this eccentricity is simply the symptom of the healthy paranoia that comes with being responsible for the protection of vital infrastructure and sensitive data.

For serious security testing, there are several components, including the audit of all the usual administrative and technical controls you would expect from a cloud company. Then there are the dreaded penetration tests, which many companies avoid. In a security-first infrastructure, pen tests are not only ingrained in the routine but also held to demanding specifications for frequency, methodology and transparency.

- Frequency

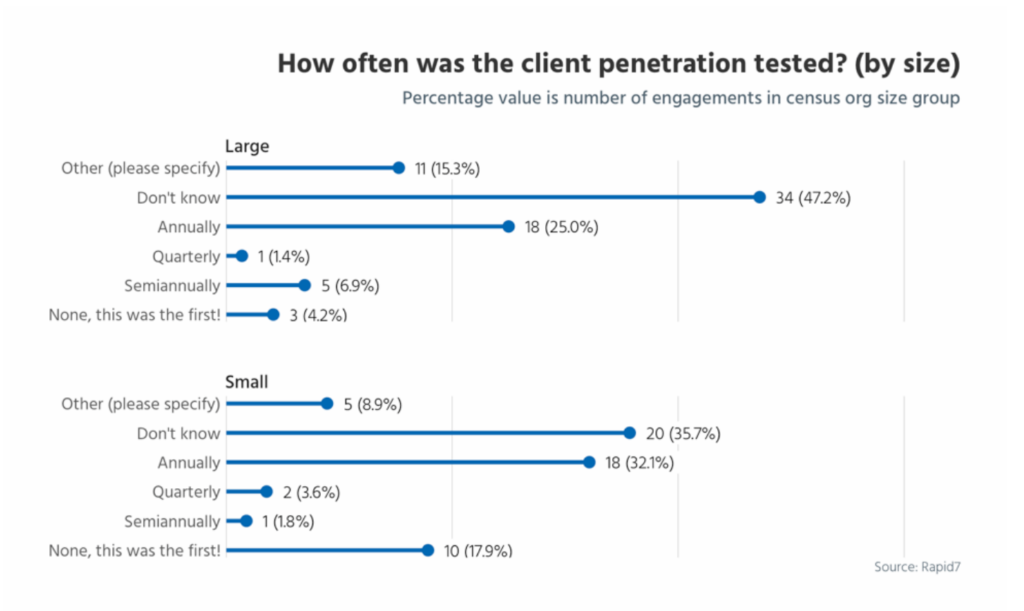

Most companies avoid penetration tests altogether. Others perform them annually at best, which is the minimum frequency required to meet the standards and certifications their auditors tell them they need. What many auditors do not challenge is whether or not adequate penetration testing has been performed after every ‘material change’ to the company’s product or infrastructure.

In a cloud environment where most vendors take pride in the frequent deployment of new features and functionality, it is unlikely that performing penetration tests annually would be sufficient. Because of these frequent changes, it is important to confirm that your cloud vendors test often enough to catch any new vulnerabilities that have inadvertently been introduced.

Cloud platforms that perform more than five penetration tests in six months are the exception, but the high frequency provides assurance that changes to the service, as well as newly-discovered vulnerabilities within other components of their environment, are not putting their customers and their data at risk.
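
The "material change" question raised above can be checked mechanically. The following is a minimal sketch, not a standard: the function name `untested_changes`, the 30-day window, and the dates are all assumptions chosen for illustration. It flags any change that was not followed by a penetration test within the window.

```python
from datetime import date, timedelta


def untested_changes(changes, pen_tests, max_gap_days=30):
    """Return material changes not followed by a pen test within max_gap_days.

    The 30-day default is an illustrative assumption, not an industry rule.
    """
    window = timedelta(days=max_gap_days)
    uncovered = []
    for change in sorted(changes):
        # A change counts as covered if any test falls inside its window.
        covered = any(change <= test <= change + window for test in pen_tests)
        if not covered:
            uncovered.append(change)
    return uncovered


# Hypothetical release and test dates, for illustration only.
releases = [date(2018, 1, 15), date(2018, 3, 2), date(2018, 5, 20)]
tests = [date(2018, 1, 29), date(2018, 6, 1)]

print(untested_changes(releases, tests))
```

With these sample dates, only the March release is reported, because no test followed it within 30 days. A vendor testing once a year would leave most releases in that list.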

- Methodology

Another aspect of security obsessiveness is the approach taken in managing penetration testers. To simulate the compromise of an employee’s or customer’s credentials, testers are provided with limited access to a non-production environment. Engagements run a minimum of two weeks and begin with providing the testers not only with the access credentials, but also with substantial information about the architecture, network design, and, when applicable, the application code. (This method is sometimes referred to as White Box Testing.)

If, after a specific period of time, the testers have not been able to find an entry point, a security-obsessed cloud vendor gradually provides the testers with slightly more access until they are able to uncover vulnerabilities, or until the time is up.

Why would such organizations divulge so much information? Basically, painful as it may be, this policy provides the business with visibility into what would happen if, for example, it had an insider attempting to gain unauthorized access to data. How far would the hacker get? How quickly could they be detected? How would the organization contain the threat? And so on. The information is invaluable in making the most out of pen tests.

- Transparency

One final mandate for security obsessiveness in cloud data security is the now-uncommon practice of sharing penetration test reports and remediation documentation with qualified prospects and customers (under NDA). By sharing these reports, a vendor is able to solicit additional feedback on ways to continually improve testing.

Such vendors know that transparency is the cornerstone of trust, and trust is the cornerstone of a healthy partnership with customers at every level. Providing the penetration test report and remediation evidence allows customers to see for themselves how seriously security is viewed as a whole, as well as how effective the vendor is at achieving and improving it. This allows customers and prospects to make an informed decision about the risks they are taking with the vendor.

Governing access to safe data is equally critical

Keeping data safe from external and internal incidents is one thing, but strict governance around access to this safe data is also critically important due to privacy laws and insider threats. Conversely, a lack of quick access to the right data when it is needed can seriously hinder business agility and intelligence.

Here are five perennial best practices to ensure that data is democratized securely and optimally:

- DESIGN THE PRELIMINARY FRAMEWORK AND ESTABLISH A TEAM

The first step is to establish a core team of stakeholders and data stewards to do the preliminary work in creating a data governance framework. Next, perform a thorough audit, design a business case for data governance, then define guiding principles that pinpoint what is important. Establish decision rights that identify what decisions need to be made, who will be involved in making them, and how they will be made.

The core team can then form a governance council to clearly define roles and requirements: Who will own the program or implementation? Who will own certain types or areas of data? Who will own the policies? How will policies be enforced?

- DEFINE REQUIREMENTS AND POLICIES

What does better data governance help the business to achieve? Is it better regulatory compliance, increased data security, improved data quality, or all three?

Within those broad areas, what are you attempting to achieve with your data? Here is a sample list to consider:

• Provide access to more users

• Create more transparency about the flow of data through the organization

• Increase the accuracy of external data

• Determine what your rights are for using the data

• Remove a data silo and integrate the information into other parts of the organization

• Create a central source of truth for data

• Restrict sensitive information

• Track and categorize the sensitivity of data

• Establish a set of rules for deleting data

• Revoke an employee’s access to data upon job termination

• Institute a set of data rules for a government compliance audit

For compliance and security reasons, identifying and restricting sensitive information is always a good starting point!

- ASSESS AVAILABLE TOOLS AND SKILLS

Does your organization have the skills and tools needed to execute its data governance program? You will need people with skills in data modeling and architecture, business analysis, program and project management, database administration, and report development. You will also need tools for data modeling, data cataloging and management, data movement (for example, a data pipeline), data quality control, and data reporting and analysis.

- IDENTIFY AND ADDRESS CAPABILITIES AND GAPS

To fill the identified gaps, either purchase the right tools and hire in-house specialists, or use partner tools and third-party specialists.

- EXECUTE

Ready to inventory your data? Take stock of what data you have, and where it resides.

Which people have access, and how do they use it? Companies often start with data modeling using diagrams, symbols, and textual references to represent the way the data flows through a software application or the data architecture within an enterprise.

A centralized metadata repository will help you locate and secure your most sensitive data. It will also help you understand and manage the lifecycle of your data so you can ensure its proper disposal at the end of life.
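
As a rough illustration of what such a repository records, the sketch below models a catalog entry and two queries: one that locates sensitive data and one that finds data past its retention period. The field names, locations, and retention periods are hypothetical; a production catalog would be a dedicated tool rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class CatalogEntry:
    name: str
    location: str        # which cloud or system holds the data
    owner: str           # responsible data steward
    sensitive: bool
    created: date
    retention_days: int  # how long the data may be kept


def sensitive_entries(catalog):
    """Names of datasets flagged as sensitive."""
    return [e.name for e in catalog if e.sensitive]


def due_for_disposal(catalog, today):
    """Names of datasets whose retention period has ended as of `today`."""
    return [
        e.name
        for e in catalog
        if e.created + timedelta(days=e.retention_days) <= today
    ]


# Hypothetical entries, for illustration only.
catalog = [
    CatalogEntry("customer_pii", "cloud-a/warehouse", "steward-1",
                 True, date(2017, 1, 1), 365),
    CatalogEntry("web_logs", "cloud-b/bucket", "steward-2",
                 False, date(2018, 6, 1), 90),
]

print(sensitive_entries(catalog))
print(due_for_disposal(catalog, today=date(2018, 7, 1)))
```

Recording the location alongside sensitivity and retention is what lets a single query span several cloud providers.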

By establishing your organization’s own data governance and partnering only with security-obsessed vendors on your multi-cloud journey, your organization will be able to reap and optimize Cloud benefits for greater agility and opportunities.