In the digital economy, where voluminous data need to be effectively managed, we need a different approach to the legacy issue of managing data from multiple sources.

The rise of enterprise cloud computing, e-commerce, and a vibrant, developing enterprise applications market are all contributing to the exponential growth of data.

As data volumes increase exponentially, a key challenge is storing data cost-efficiently and then accessing it efficiently without a loss in productivity. Meanwhile, rapidly developing regulatory oversight and compliance legislation by governments in the region are contributing to the increased need for data management and protection.

What’s more, organizations that are slower in addressing proper management of their data risk losing out in a key area of digital transformation – extracting value from data.

Enterprise IT teams are feeling the pressure to keep up. As backup data volumes increase, companies are having to find cost-effective solutions to store and access enterprise data that are often disparate and distributed.

Noting that Cohesity has a unique and highly effective approach to integrated data management in the data center, at the edge and in the cloud, DigiconAsia gleans some expert insights from the up-and-coming data management leader’s Vice President for Asia Pacific, William Ho.

Why is data management critical for digital transformation?

Ho: Digital transformation (DX) is at the top of the list of strategic objectives for most organizations. However, one key aspect of DX that is often overlooked, and that causes problems when not adequately addressed, is data management. Before an organization can reap the benefits of its data – which is the core of DX – it needs control over that data.

Without a centralized, consistent view of data and easy access to that data for the applications that require it, organizations will not be able to maximize the value of data for tools such as analytics, machine learning and artificial intelligence.

So, in many ways, digital transformation and data management are inextricably linked.

What are some challenges faced by CIOs today?

Ho: In today’s COVID-19 era, CIOs and IT teams are dealing with multiple challenges: enabling a virtual and hybrid workforce, shrinking budgets, and requests to accelerate digital transformation projects. It is not easy, and credit is due to them for managing the pace of change under such trying circumstances.

Meanwhile, data continues to grow exponentially, leading to escalating storage costs. Many CIOs are exploring ways to deal with this data sprawl while reducing total cost of ownership. That’s why many IT leaders are turning to software-defined data management solutions that will enable them to modernize data infrastructure, reduce compliance and security risks, and make data more productive.

Now, more than ever, CIOs are key business drivers, leading innovation and enabling new services and applications that help their organizations accelerate their digital transformation.

Hence, future-proofing investments with the right data management solutions will significantly address some of these challenges while advancing new opportunities.

What benefits does an organized and proactive data management system bring for companies?

Ho: Data is growing at an exponential rate. However, most enterprise data is fragmented across multiple locations: on-premises, in various clouds, and in backup systems. It is trapped in infrastructure silos or buried in long-forgotten systems. This challenge is called mass data fragmentation.

With mass data fragmentation, copies of the same data often exist across backup, test and development, and analytics environments, making the data inefficient and costly to store and manage. Often this data is dark, meaning the organization has little visibility into it, so deriving insights from it is time-consuming or difficult. In short, before you can reap the benefits of your data, you need to have control over it first.
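To make the idea of duplicate copies across silos concrete, here is a minimal Python sketch that groups files by content hash. It is an illustration only and not how any particular product works: the function names `file_digest` and `find_duplicates` are hypothetical, and it assumes each silo is reachable as an ordinary directory.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(roots: list[Path]) -> dict[str, list[Path]]:
    """Group files under the given roots by content.

    Each root stands in for one silo (an assumption for illustration).
    Only groups with more than one copy are returned.
    """
    by_digest: dict[str, list[Path]] = defaultdict(list)
    for root in roots:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                by_digest[file_digest(path)].append(path)
    return {d: paths for d, paths in by_digest.items() if len(paths) > 1}
```

Calling `find_duplicates([Path("backup"), Path("devtest"), Path("analytics")])` on three such directories would return one entry per piece of content that is stored more than once, along with every location holding a copy.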

With our web-scale platform, for example, we offer organizations a simple way to protect, manage, and derive value from their data. This platform solves mass data fragmentation by bringing data silos together, managed through one user interface. Our platform also enables organizations to do more with their backup data, using it, for example, to run rapid test and development projects or derive insights by running analytics. The platform reduces the costs of managing data and backups, helps ensure service level agreements are met, and helps businesses run more efficiently.

Why is data management essential to mitigating business risks, particularly around data governance and protection?

Ho: Today, organizations are very reliant on their data infrastructure. Any downtime in either the data infrastructure or applications can have a significant impact on revenue and productivity. Similarly, ransomware or cyber-attacks have become major issues in Asia. This not only puts organizations and their customers at risk but also erodes brand value.

In addition, with the advent of regulatory requirements including the General Data Protection Regulation (GDPR) in Europe and Singapore’s Personal Data Protection Act (PDPA), organizations today need to view, and mitigate, data privacy breaches as a real business risk.

There are two key factors to consider:

- First, the data itself – does the organization have visibility into what sensitive data it might have and where it is? Many organizations struggle with this. Quite often, multiple copies of the same data are sitting in disparate and distributed locations including the data center and the cloud. As a result, organizations are unable to comply with regulatory guidelines comprehensively without incurring significant costs and additional processes.

- Another factor to consider: how quickly can the organization recover from an incident impacting IT data operations? Every minute and every second is critical to the business. This is where legacy solutions lack the ability to scale to meet recovery time objectives, especially when attempting to recover thousands of virtual machines in minutes.

What are the various levels of data and how does storage, retrieval and archive impact the ability to analyze these sources to derive insights?

Ho: Data can be classified into two main areas – primary data and the remaining enterprise data.

- Primary data: typically important application data that is used for the day-to-day operations of any business. This data is usually transactional and is often stored in a low-latency storage location where fast access is critical. However, you generally cannot store vast amounts of data in these locations due to scale and cost. Usually this is about 20% of an organization’s overall data.

- Remaining enterprise data: may also include some business-critical data, but this data may not have the same time/access requirements as primary data on a day-to-day basis. It often comprises data that’s used for backup, test and development, analytics, file shares and object storage. This data is often fragmented, trapped in silos, inefficient and dark. Many organizations struggle with visibility of this data, and this is further complicated when multiple copies of the same data exist across various silos. Nearly 80% of any organization’s data falls under this area.

With the demands for insights, analysis and regulatory requirements ever increasing, IT teams are asked to deliver access to all levels of data at various times and usually on short notice. Today, IT teams are working with organizations that are more nimble and responsive, and they must keep up with those organizations’ needs for access to data and information.

However, this efficiency comes at a cost. The pressure of squeezed release-cycles combined with fast-changing application architecture has, in many cases, left a trail of data silos, out-of-date applications, security failures, non-compliance, and ballooning cloud bills.

Hence a solution that enables visibility across all sources of data to provide a single global view for companies to actively manage backups, file shares, object stores and data for development / testing and analytics would be the ideal approach.

This would accelerate organizational and IT productivity by providing control and visibility over data and apps in the data center, in the cloud, and at the edge. Ultimately it enables the organization to derive meaningful insights from the data for proactive planning.

How should organizations approach data management effectively while lowering costs?

Ho: Data management can mean many things to different stakeholders, depending on their role within the organization. At the heart of it, it is about backing up, storing, managing, securing and gaining insights from data in an efficient manner.

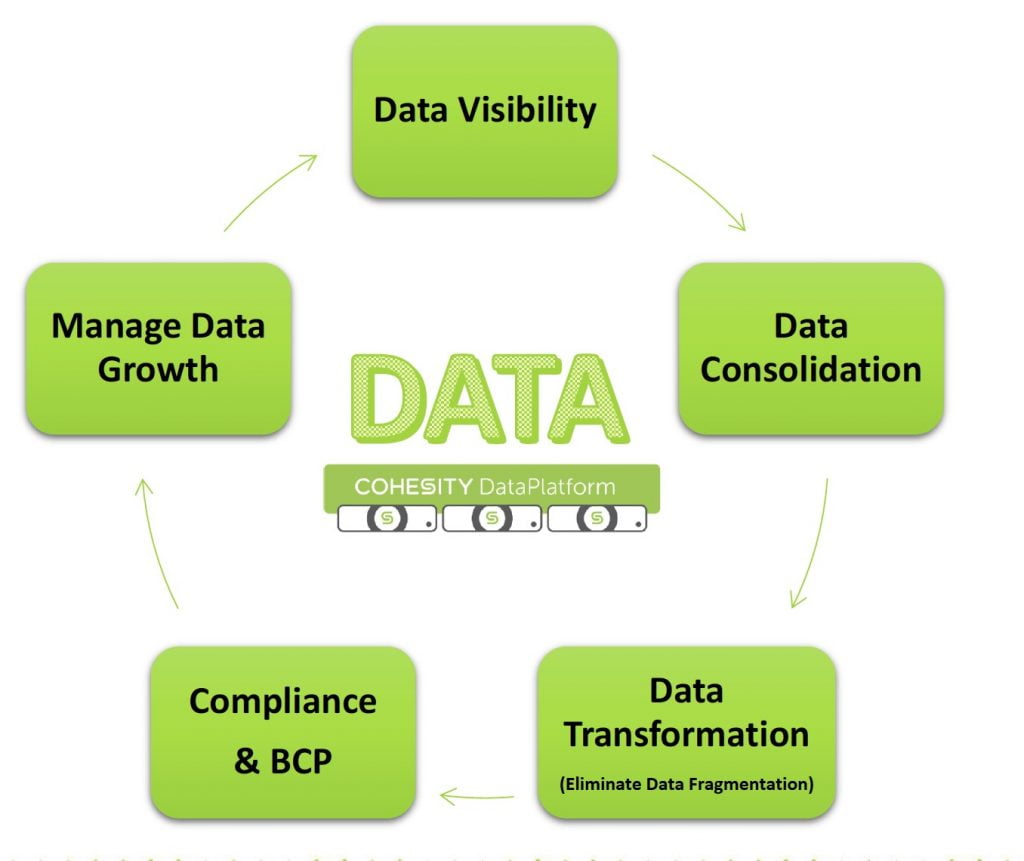

For organizations to manage their data efficiently and rein in the rising cost of storage, the following five areas should be addressed:

Data visibility: Firstly, organizations need to have visibility into their data – what information is stored where, how secure is it and how long has it been there?

Data consolidation: Once this is done, companies can start to consolidate the silos of infrastructure and data to gain efficiencies. A web-scale platform like Cohesity’s allows organizations to significantly reduce their storage costs, whether on-premises or in the cloud.

Data transformation: How do I protect, manage and secure this data? How do I eliminate silos of information and copies to enable true data transformation? Then, equally important, how do I derive insights from data? These are critical issues that modern data management solutions can address. Cohesity is unique in its ability to help companies back up, manage, and derive value from data by providing global visibility and Google-like search capabilities.

Compliance and business continuity planning: Once visibility of data is achieved and organizations know how to derive value from the data, they can now retire data that is unused and manage what’s required for compliance. As a result, storage costs are reduced significantly.

Today many organizations struggle with deleting data as they lack visibility around what should be kept and what can be deleted.

Manage data growth: Finally, when the organization has achieved the above stages, it regains control over its data and is now positioned for greater business growth.
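The visibility and retirement steps above can be illustrated with a small Python sketch over a hypothetical data catalog. Everything here is an assumption made for illustration: the `DataRecord` fields, the `retirement_candidates` helper and the 365-day "unused" threshold are not taken from any product or regulation, and real retention rules would come from the organization’s own compliance requirements.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DataRecord:
    """One catalog entry: what is stored, where, and how it is used (hypothetical)."""
    name: str
    location: str          # e.g. "on-premises", "cloud"
    last_accessed: date
    compliance_hold: bool  # True if the data must be kept for compliance


def retirement_candidates(records, today, unused_after=timedelta(days=365)):
    """Return records that are not under a compliance hold and have not
    been accessed within the `unused_after` window (an assumed threshold)."""
    return [
        r for r in records
        if not r.compliance_hold and today - r.last_accessed > unused_after
    ]
```

With a catalog like this in place, data under a compliance hold is always kept, while long-unused data without a hold is surfaced for review before deletion rather than removed automatically.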

What are some tips or advice you would share with organizations in the Asia Pacific region?

Ho: In my opinion, organizations looking to future-proof their data management capabilities should consider the following:

- Simplicity and scalability: A data management solution should enable backups to be simple, fast, intelligent and efficient. It needs to be scalable, allowing you to future-proof investments without requiring a major overhaul or upgrade, which is costly and time-consuming. It needs to be intelligent, protecting the organization as needed.

- Predictable recovery at scale: Recovery time is critical as it impacts the business. This is an area where we see many companies not putting real-world environments to the test. Think about recovering thousands of virtual machines in minutes.

- Insights from backup: Organizations should be able to derive insights from their backup data, not just store it. Backups are now a source of business insight, test data for developers, and a source for checking regulatory compliance readiness.