Multilingual, voice-enabled virtual agents are geared to keep customers closer to home in the Asia Pacific region.

Voice and speech recognition technology has advanced by leaps and bounds, especially with the help of technologies such as natural language processing and artificial intelligence.

Enterprises whose customer base speaks multiple languages are quick to see the benefits. Technology giants are also digging deeper into voice-based technologies as part of their future-ready business strategies. This is especially relevant for the highly diverse, multilingual Asia Pacific market.

According to Uniphore, the next wave of voice tech is well under way: voice and speech technologies are now expanding beyond English into multiple languages and dialects to engage customers. Uniphore is building solutions that combine natural language processing and speech recognition with artificial intelligence and automation to understand customers precisely.

DigiconAsia discussed the exciting developments and the future of voice technology with Ravi Saraogi, Co-Founder and President, Uniphore APAC.

Why do you think industry analysts believe that the global speech and voice recognition market will grow at a CAGR of 17.2% to reach US$26.79 billion by 2025?

Ravi: As machines around us get smarter, the best way for us to communicate with them is not to change our behavior to touch or gesture, but to communicate through our voice. Voice is how we express emotion, resolve our problems, work and live.

Businesses understand that conversational artificial intelligence (AI) software can transform enterprises for business gains, and many have been keen to adopt voice-enabled devices. However, the limited scope and accuracy of such software has been an Achilles’ heel for developers and scientists. In the past, unreliability was often the major talking point when it came to speech recognition tools.

Now, thanks to emerging technologies such as AI and machine learning, natural language processing and automation, conversational AI is becoming more accurate and supports analytics across multiple dimensions. That is why you see more brands integrating voice-enabled payments and transactions: they want to deliver a superior customer experience and stand out from all the noise and clutter in the industry.

How important is multi-language voice recognition for the future of business? Which industries would benefit the most from the technology?

Ravi: Across Asia, people speak nearly 2,300 living languages, including many widely spoken languages that we may not be familiar with. In India alone, there are 22 major languages with over 720 dialects. So, imagine the market potential we’re looking at when we consider this many languages! Businesses can never deliver excellent customer service when they can’t even understand customers in the language that they’re most comfortable with. That is a very important factor at the beginning of, and throughout, the overall customer journey.

Multi-language voice recognition applies to many different industries. The industries that stand to gain the most are banking, insurance, healthcare, travel, e-commerce, and retail. These are industries that tend to have large contact centers, and also industries where customers tend to contact brands the most. In banking, for instance, it’s important that businesses capture transaction requests accurately. As for e-commerce and retail, as I’ve mentioned previously, more and more home devices are getting smarter. They’re enabling sales on behalf of the user with just a simple voice request.

Please share some examples of how AI, natural language processing, speech recognition and automation would help in customer service.

Ravi: The future of customer service has arrived. With Uniphore’s Conversational Service Automation platform, we integrate all three of our solutions into one seamless journey for clients and their customers.

For instance, an insurance customer makes a claim online about his recent traffic accident. When he logs in to the insurance company’s portal, our conversational security solution amVoice™ instantly authenticates him using his own voice. The digital agent is a secure and automated identity verification system that uses an individual’s unique voice biometrics to authenticate them, assuring maximum protection against identity theft and fraud. It captures over 30 parameters of an individual’s voice, including frequencies not audible to the human ear, irrespective of device, language and accent. It’s a more secure and effective method than fingerprints.

After the customer logs in successfully, akeira takes over the conversation and gives an update on his insurance claim, a simple transaction that shouldn’t require a human agent in the first place. However, the claim was made last week, so the customer starts to get frustrated at the slow response time and wishes to talk to an agent. Identifying the frustration in his voice, akeira responds with empathy-driven, human-like contextual replies. It then hands the conversation over to a human as this turns into a more complex transaction.
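The hand-off decision described above can be illustrated with a minimal Python sketch. This is not Uniphore’s implementation: the `Turn` type, the `next_handler` function, the frustration score and the 0.7 threshold are all hypothetical, and the score is assumed to come from some upstream emotion-detection model.

```python
from dataclasses import dataclass

# Hypothetical cut-off; a real system would tune this on labelled calls.
FRUSTRATION_THRESHOLD = 0.7


@dataclass
class Turn:
    """One customer utterance, as seen by the (hypothetical) router."""
    text: str                      # transcript of what the customer said
    frustration: float             # 0.0 (calm) to 1.0 (very frustrated), assumed input
    asked_for_human: bool = False  # customer explicitly requested an agent


def next_handler(turn: Turn) -> str:
    """Return "human_agent" if the turn should be escalated, else "virtual_agent"."""
    if turn.asked_for_human or turn.frustration >= FRUSTRATION_THRESHOLD:
        return "human_agent"
    return "virtual_agent"


if __name__ == "__main__":
    # A routine status query stays with the virtual agent.
    print(next_handler(Turn("What is the status of my claim?", 0.2)))
    # A frustrated customer is escalated to a human.
    print(next_handler(Turn("I filed this claim last week!", 0.85)))
```

The rule is deliberately simple: either an explicit request or a high frustration score triggers the transfer, so routine status queries stay automated.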

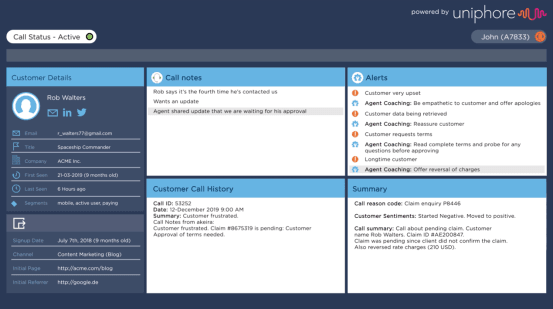

After a seamless hand-off, our conversational automation and analytics solution, auMina, steps in as a powerful tool for agents to resolve customer calls. Advanced AI is used to deliver proactive agent alerts, real-time coaching, automatic note-taking and discovery of caller intent. Intelligent automation capabilities ensure proactive data and information pushes, so agents can deliver the best customer experience without putting customers on hold. After the call ends, auMina continues working to complete the interaction by generating a call summary, which is uploaded seamlessly into the CRM system to facilitate automated follow-through, all for better customer care.

What is your vision of the future chatbot or virtual agent?

Ravi: There definitely needs to be human-AI synergy in the new decade. Consider this scenario: A customer starts a conversation with a chatbot for quick self-service. The bot is able to provide some quick and valuable updates based on the customer’s previous interactions. If the conversation gets more complex, the bot politely hands the call to a human agent via a live transfer. Assisted by real-time analytics and chat transcripts, the agent can make the next best offer, which the customer gladly accepts. This is exactly like my example above.

Governments, businesses and working citizens must all understand that the role of automation is to increase the productivity of existing call center agents, not to replace these agents altogether. My personal hope is that businesses blend the capabilities of people and AI to better understand conversations in real time.