Surveys: we cannot live without them, but we can take them with more pinches of salt. Here is how…

In today’s age of digital information and generative AI, we are bombarded with pre-packaged data when we search for it, and even when we do not actively need it.

Of all the information we gather, we need to be cognizant that not all of it is reliable, relevant, or able to withstand close scrutiny. Some of it may be good enough for a primary school essay yet fail to pass muster with academics.

One common data source is the survey, which brands, research firms, and media outlets routinely use to present data and influence public opinion. However, not all surveys are created equal, and some can be misleading or biased. Here is a guide to help readers judge the quality of survey reports and press releases as they consume news and other online sources of opinion.

What a high-quality survey possesses

Just because a spokesperson quotes a survey to back up an assertion does not make the claim valid. Here are some basic things to check in any survey:

- Date and time-span information

- Always check when the survey was conducted. If the date and duration are not mentioned, the findings may be outdated or questionable. If the survey was conducted outside the time range that matters for a time-sensitive question, its findings may simply not apply. A research firm may conduct a survey for a particular time frame, only for the company that commissioned it to later repurpose the results and apply them to a future period, so that it sounds as if it can authoritatively predict the future.

- Profiles of respondents

- Look for details about who participated in the survey. This includes demographics such as age, gender, occupation, and geographical spread, especially for international surveys. Were respondents paid or rewarded in any way? What proportion of respondents fell into each geographical, industry, or job-seniority group? Is one profile far more heavily represented in one country’s sample than in the others? Any imbalances must be explained, and they limit what findings can legitimately be reported about any particular group of respondents.

- Nature of the survey

- Understand how the survey was conducted. Was it anonymous, or nominally anonymous but easily traceable? Did it use a multiple-choice format, or did it allow open-ended comments? Was it run online or in person? The methodology can significantly affect the quality and depth of the results.

- Motivation of respondents

- Check whether respondents were rewarded or had any other incentive to participate. Incentives can influence how people respond and so affect the survey’s reliability. Any survey that omits this information, or does not disclose potential influences of this kind, deserves close scrutiny.

- Sample size

- A larger sample size generally yields more reliable results, although size alone cannot correct a biased sample. Be wary of surveys with small sample sizes that are nonetheless emblazoned with hyperbole, as their claims may not accurately represent larger populations and respondent profiles.

- Sampling method

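- Look for information on how respondents were selected. Random sampling, where respondents are drawn at random from the whole target population, is usually more reliable than convenience sampling (surveying whoever is easiest to reach), which can introduce bias.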

- Survey sponsor

- Identify who funded or commissioned the survey. Surveys sponsored by organizations with a vested interest in the results may be biased. One notable example that made the news is the 2016 ExxonMobil climate change survey, which the company conducted to gauge public opinion on climate change and energy policies. Critics argued that the survey was designed to produce results favorable to ExxonMobil’s interests, potentially downplaying the urgency of climate change and promoting fossil fuel use. Such surveys often face scrutiny because the sponsor’s vested interests can influence the survey design, question wording, and interpretation of results, leading to biased or misleading conclusions.

- Wording of questions

- The most credible surveys publish the exact questions posed to respondents. Pay attention to how questions were phrased, because leading or loaded questions can skew results and misrepresent respondents’ true opinions. In one poll, for instance, one version of a question asked: “Do you support or oppose the war in Iraq?” That wording was straightforward, but another version asked: “Do you support or oppose the war in Iraq to remove Saddam Hussein from power?” By including the justification “to remove Saddam Hussein from power,” the second version framed the war in a more favorable light, potentially making respondents more supportive of it. Such a subtle change in wording can significantly affect the responses, showing how semantics and phrasing can be used to manipulate survey outcomes.

- Margin of error

- Reliable surveys usually state a margin of error, which indicates how far the reported figures could plausibly differ from the true population value because of random sampling alone, at a stated confidence level (commonly 95%). A smaller margin of error suggests more precise findings. Even where a high confidence level is cited, factors that the margin of error does not capture, such as sampling bias, non-response bias, response bias, weighting errors, cultural differences, and question wording, can still affect the responses and the survey’s credibility. For example, many US polls pointed to a clear win for Hillary Clinton in 2016, yet Donald Trump won the presidency. Certain demographic groups, particularly rural and less-educated voters, were underrepresented in the polls.

- Context and comparison

- Consider the context in which the survey was conducted and compare it with other similar surveys. Consistent findings across multiple surveys can add credibility to the results. Beware of editorializing by the communications firm pitching the survey findings. Very commonly, firms take a trend discerned from narrow data and attach their own biased guesses or opinions about it, even though the trend may have many causes that a simple survey does not address. Attaching commentary to a clear trend does not make that guess about its cause(s) any more valid than any other guess. It can, however, lead readers who lack a critical eye to believe that the commentary (especially if it is not disclaimed as just an “educated guess”) is authoritatively backed by data.
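The sample-size and margin-of-error points above can be illustrated with a little arithmetic. Below is a minimal Python sketch, assuming a simple random sample, the usual normal approximation for a proportion, and a 95% confidence level (z = 1.96). The `biased_poll` function and its population figures (two equal-sized groups with 60% and 30% support) are purely hypothetical, chosen to show that a large but skewed sample can miss the true value by far more than its stated margin of error.

```python
import math
import random


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    Uses the normal approximation z * sqrt(p * (1 - p) / n). The defaults assume
    a 95% confidence level (z = 1.96) and the worst case p = 0.5.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    return z * math.sqrt(p * (1 - p) / n)


def biased_poll(n: int, seed: int = 1) -> tuple[float, float]:
    """Compare a random sample with a skewed one from a HYPOTHETICAL population.

    Hypothetical population: half in group A (60% support), half in group B
    (30% support), so true support is 45%. The skewed sample draws 80% of its
    respondents from group A, mimicking a convenience sample.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible

    def respondent(from_a: bool) -> int:
        # Returns 1 if this simulated respondent supports the proposition.
        rate = 0.60 if from_a else 0.30
        return 1 if rng.random() < rate else 0

    random_sample = [respondent(rng.random() < 0.5) for _ in range(n)]
    skewed_sample = [respondent(rng.random() < 0.8) for _ in range(n)]
    return sum(random_sample) / n, sum(skewed_sample) / n


if __name__ == "__main__":
    # Margin of error shrinks with the square root of the sample size.
    for n in (100, 400, 1000, 2500):
        print(f"n={n:>5}: ±{margin_of_error(n):.1%}")

    random_est, skewed_est = biased_poll(10_000)
    print(f"true support 45.0%, random sample {random_est:.1%}, "
          f"skewed sample {skewed_est:.1%}")
```

Running it prints margins of error of about ±9.8%, ±4.9%, ±3.1%, and ±2.0% for the four sample sizes, so quadrupling a sample only halves the margin. In the hypothetical poll, true support is 45%, but because the skewed sample draws 80% of its respondents from the more supportive group, its estimate should land near 54%, well outside the roughly ±1% margin a 10,000-person random sample would claim.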

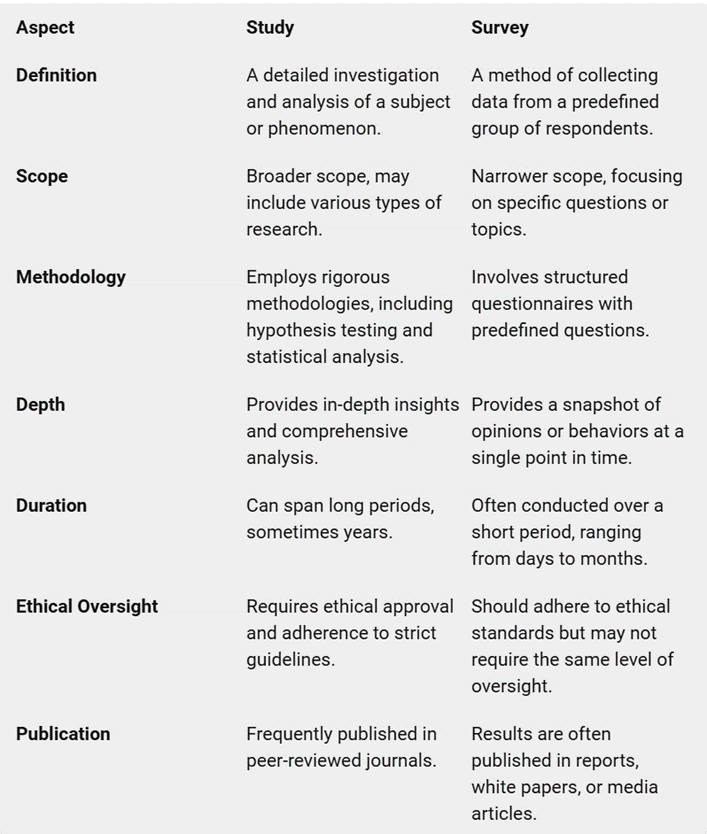

Oh, and one last thing: beware of press releases and communication professionals who do not know the difference between a study and a survey.