Two leaders explore what can happen when inefficiencies such as missing metadata, sub-standard data quality and overlapping data standards are overcome

A recent white paper on how financial markets will leverage big data in the near future posits that the industry is at a data management crossroads due to a confluence of pandemic-induced global digitalization, socio-political and economic challenges, and the increasing impact of cyber threats.

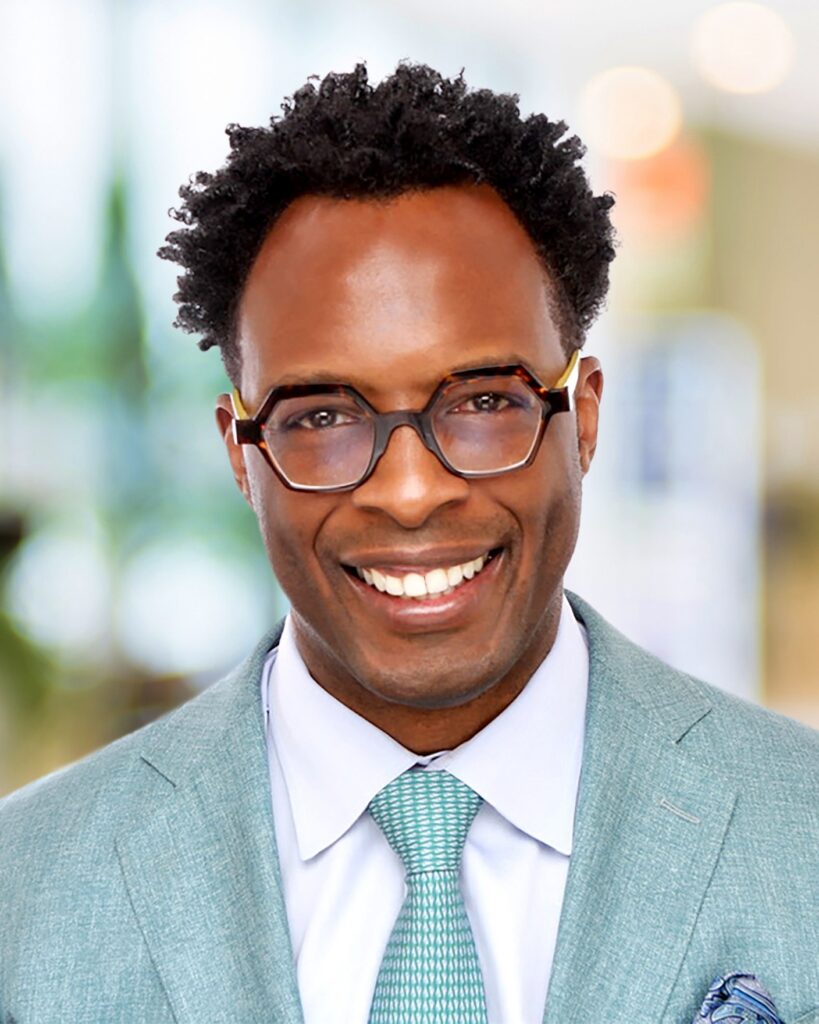

To understand more of the thinking behind the white paper, DigiconAsia.net interviewed two leaders from the Depository Trust & Clearing Corporation (DTCC), the source of the white paper: Kapil Bansal, Managing Director and Global Head of Business Architecture, Data Strategy and Analytics; and Jason Harrell, Managing Director, Operational and Technology Risk and Head of External Engagement.

DigiconAsia: What are the key principles that DTCC proposes for unleashing the power of data in the financial services sector?

Kapil Bansal (KB): The financial services industry is undergoing a significant transformation due to the advances around data and technology. For example, the rise of cloud technology has provided a new avenue for financial services firms to store, manage and analyze vast amounts of data efficiently.

As a result of these advancements, the industry now has more data at its fingertips than ever before, but legacy issues hinder the industry from truly delivering upon the value of this data.

Historically, an institution’s data has been organized in disparate data stores and often transferred point-to-point between them with highly manual handling, which limits its optimal use. To unleash the power of this legacy data, the industry must first work collectively with standards bodies and financial authorities to develop clear data standards covering data privacy, data use and collection, data ownership, and data management.

Second, firms should bring their data into a data ecosystem leveraging flexible data access methods that align with these data standards.

Third, firms should continue to embrace automation. We need to get to a place where all data handling is highly automated, providing team members with increased capacity to focus on data insights.

DigiconAsia: According to the Rubbish In, Rubbish Out axiom, how can the quality of data be raised across the board in terms of interoperability, standards and widespread accessibility so that more data is turned into actionable insights?

KB: First and foremost, the industry should align on data standards that improve interoperability across market participant firms. There are several initiatives focused on this area, but a concerted effort needs to be made to align data standards across the various regulatory jurisdictions because the markets are global in nature. Doing so will also help improve data quality for critical functions such as risk management and regulatory reporting.

With globally harmonized data standards as a foundation, more value can be gained from technologies such as cloud and AI/ML to generate more advanced insights.

DigiconAsia: Given that the financial sector is one of the most targeted by cybercriminals, and that cybercriminals are also removing data silos and fully utilizing the power of data to target victims and cover their tracks, what in your view are the top three measures the financial industry should get right as a foundation before they are ready to unleash the power of their data?

Jason Harrell: Cyber threat actors continue to leverage sophisticated attacks against the financial services sector and its supply chains for financial gain or to create operational disruptions that could have a material impact on an interconnected financial services sector. In fact, sophisticated cyberattacks are increasingly leveraging legitimate credentials and built-in system tools to gain access to organizations’ networks, and are relying less on malware to conduct these attacks.

Further, the time between a breach and the lateral movement of the threat actor to other systems is decreasing (roughly 90 minutes). These threats require financial institutions and their supply chains to be more vigilant in protecting their networks. Prioritizing the following three areas will strengthen the controls of financial institutions and third parties.

- Vulnerability management

Sophisticated threat actors use known vulnerabilities and misconfigured systems to compromise an organization’s security defenses and gain a foothold in its networks. Financial institutions must be vigilant and thorough in patching their systems and should deploy a comprehensive set of security baselines for their commonly used systems and applications. Further, financial institutions should scan their devices to improve assurance that systems are configured in alignment with these baselines.

- Threat hunting

Cyber threat actors are using legitimate credentials and built-in tools to install custom exploits onto an organization’s systems. These threat actors are relying less on the installation of malware to execute these attacks and are increasingly using interactive ‘hands on keyboard’ attacks against these systems. Therefore, antivirus and antimalware are just one piece in protecting networks from these attacks. Financial institutions and their supply chains must proactively identify compromised systems that may exist on their network. Threat hunting, the activity of searching the network to identify and isolate advanced threats, is a necessary component of protecting an organization’s information resources.

- Intrusion detection

Using intrusion detection systems provides highly accurate detection and prompt response by automatically blocking and remediating network intrusions.

Given that threat actors generally require less than 90 minutes to laterally move through the network, financial institutions must deploy this defense tactic to gain the time needed to limit the pervasiveness of the attack.

By employing and strengthening the above capabilities, financial institutions can continue to bolster the security of their network infrastructure.

DigiconAsia thanks Kapil and Jason for sharing their insights.